Google's AI is incorporating the search function, which is how it can produce an accurate explanation of the kinds of materials classed outside Dewey 200 and retrieve correct book titles. But the text-prediction side of genAI interferes with accurate results, which is why it's making mistakes. This would be a great example to show a class to illustrate the perils of using AI for search.

Out of curiosity, I repeated your search, and this time it did generate three titles that are real works and that are classed outside of 200. I wonder whether the results become more accurate as more people repeat the same search. However, the introductory paragraph implies that all the books it's about to cite are in the 300s. The third, A History of the Crusades by Runciman, is actually in the 900s. It's also worth noting that the Dewey discussed in the third link under "Learn More" is John Dewey, not Melvil.

It demonstrates how perilous it is for nonexperts, especially undergraduates, to use genAI as a search tool. You have to know enough about your subject to know what does and does not need to be verified.

------------------------------

Noelle Barrick

Director

WSU Tech Library

------------------------------

Original Message:

Sent: Jan 23, 2025 11:24 AM

From: Marc Meola

Subject: Google's AI Search example

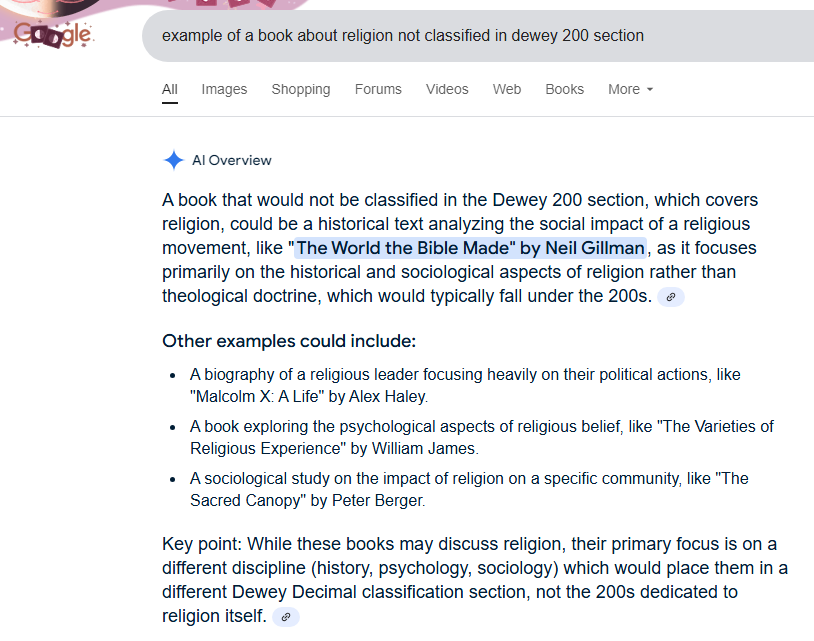

I'm wondering how Google's AI generated this answer, which is on target (besides the incorrect citations). This seems to me to be more than text prediction on a large language model. Or is it?

Also, frustratingly, of the citation examples, the first book does not exist, the second has the wrong title, the third is in fact classified in 200, and only the fourth is a good example of the subject in question. Do we know why AI is so bad at citations?

------------------------------

Marc Meola

Assistant Professor in the Library

Community College of Philadelphia

He/Him/His

------------------------------